A nice-to-have feature when logging to terminals is to have the output colourised, typically according to the severity of individual messages. This is fairly straightforward to achieve, but the following points have to be borne in mind:

- On Unix, Linux and OS X, terminals support ANSI escape codes for colourisation, and so a single solution should be able to support all of these platforms.

- Windows does not support ANSI escape codes (though MS-DOS used to, using the ANSI.SYS driver), but does provide an API which can be used to achieve a similar effect.

- When the terminal output is redirected to a file, colourisation should be disabled.

In this post, I’ll present a fairly simple handler which considers each of the above points in its design and implementation. I’ll be adding this handler to the logutils package soon.

We’ll assume that an entire formatted message will be colourised. If you need to colourise only certain parts of the message, you can adapt the solution presented here fairly easily – perhaps by adding a specialised formatter which is aware of ANSI escape codes.

We’ll also assume that we only want to be able to set background colour, foreground colour and foreground intensity, and that we’ll only want to set eight colours – red, green, blue, cyan, magenta, yellow, black and white. That’s more or less the lowest common denominator afforded us by ANSI escape sequences. If you have more specialised needs which are supported by your environment, you should be able to adapt the solution presented here, most likely just by subclassing.

It seems appropriate to base the handler on logging.StreamHandler. In addition to importing logging, we’ll need to import os (so that we can take appropriate action on Windows) and ctypes (so we can access the Windows console API needed to colourise terminal output).

This is how we start the handler implementation:

    import ctypes
    import logging
    import os

    class ColorizingStreamHandler(logging.StreamHandler):

        @property
        def is_tty(self):
            isatty = getattr(self.stream, 'isatty', None)
            return isatty and isatty()

        def emit(self, record):
            try:
                message = self.format(record)
                stream = self.stream
                if not self.is_tty:
                    stream.write(message)
                else:
                    self.output_colorized(message)
                stream.write(getattr(self, 'terminator', '\n'))
                self.flush()
            except (KeyboardInterrupt, SystemExit):
                raise
            except:
                self.handleError(record)

Noting that we need to determine when terminal output is piped to a file, we provide a property called is_tty on the handler which says whether the handler’s stream is a terminal. If it is, we’ll use a method called output_colorized (whose implementation will be platform dependent, and discussed shortly) to do the colourised output; otherwise, we just write the message to the stream.
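To see the terminal check in isolation, here is a minimal sketch of the same test as a free function. The names is_tty (as a function) and NoIsattyStream are made up for illustration, and unlike the property above the sketch wraps the result in bool() so that it always returns True or False.

```python
import io
import sys


def is_tty(stream):
    """Return True only if the stream reports that it is a terminal."""
    isatty = getattr(stream, 'isatty', None)
    return bool(isatty and isatty())


class NoIsattyStream:
    """Hypothetical file-like object that has no isatty method."""
    def write(self, text):
        pass


print(is_tty(io.StringIO()))      # False: an in-memory buffer is not a terminal
print(is_tty(NoIsattyStream()))   # False: there is no isatty method to ask
print(is_tty(sys.stderr))         # depends on how the script was launched
```

Run it once directly in a terminal and once with stderr redirected to a file, and the last line should differ between the two runs.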

In order to add colour to the message, we’ll override the format method of the handler:

        def format(self, record):
            message = logging.StreamHandler.format(self, record)
            if self.is_tty:
                # Don't colorize any traceback
                parts = message.split('\n', 1)
                parts[0] = self.colorize(parts[0], record)
                message = '\n'.join(parts)
            return message

We first call the base class format method to do the actual formatting according to whatever formatter is set for the handler. If we are writing to a terminal, we extract the first line of the formatted text (which could contain an exception traceback), colourise that first line and add the traceback without any colouring.
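The first-line split can be tried out on its own. The sketch below formats a record carrying exception information with a plain logging.Formatter and then applies a stand-in colouriser to the first line only. The helper names colorize_first_line and mark are hypothetical, and mark simply wraps text in brackets so the effect is visible without a terminal.

```python
import logging
import sys


def colorize_first_line(message, colorize):
    """Apply colorize to the first line only; leave any traceback as-is."""
    parts = message.split('\n', 1)
    parts[0] = colorize(parts[0])
    return '\n'.join(parts)


def mark(text):
    # Hypothetical stand-in for real colourising: wrap the text in brackets.
    return '[' + text + ']'


try:
    1 / 0
except ZeroDivisionError:
    # Build a record by hand so that the example needs no logger set-up.
    record = logging.LogRecord('demo', logging.ERROR, 'demo.py', 1,
                               'division failed', None, sys.exc_info())

text = logging.Formatter().format(record)
first, rest = text.split('\n', 1)
print(first)                                            # division failed
print(rest.splitlines()[0])                             # Traceback (most recent call last):
print(colorize_first_line(text, mark).splitlines()[0])  # [division failed]
```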

I did consider putting the colourising logic into a specialised Formatter subclass, but decided against it, because:

- Colourising is only done when writing to a terminal; a formatter would be unaware of specific output destinations.

- Placing the colourising logic in a handler would allow a formatter to be shared with other handlers.

- It’s simpler to keep everything in one place.

To do the grunt work, we’ll define the colorize helper method together with some data which drives it. We’ll make it the method’s job to add ANSI escape sequences to colour the message, even though Windows does not support ANSI escape sequences natively – we’ll just have to deal with that in the implementation of output_colorized, which we’ll come to a little later.

Let’s discuss ANSI escape sequences briefly now – for more information you can consult this article. Basically, colourising is done by placing a special character sequence in the character stream sent to the terminal. The sequence always starts with an ESC (ASCII 27) followed by a left bracket character ([). The sequence always ends with an ‘m’. In between are one or more integer parameters (written as decimal digits and separated by semicolons). These parameters have the following meanings:

- 0 – reset background and foreground colours and intensity to the defaults.

- 1 – set the foreground intensity on.

- between 30 and 37 – set the foreground colour to one of the values in this table, after subtracting 30 from the parameter.

- between 40 and 47 – set the background colour to one of the values in this table, after subtracting 40 from the parameter.

There are other parameters with other meanings in the ANSI specification, but we won’t concern ourselves with those as they aren’t relevant to our simple colourisation scheme.
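As a quick illustration of the structure just described, the sketch below builds a sequence from its integer parameters. The helper name sgr is made up for this example (after “Select Graphic Rendition”, the usual name for this family of sequences), as are the constants CSI and RESET.

```python
CSI = '\x1b['        # ESC (ASCII 27) followed by a left bracket
RESET = CSI + '0m'   # parameter 0: back to default colours and intensity


def sgr(*params):
    """Build an escape sequence from integer parameters."""
    return CSI + ';'.join(str(p) for p in params) + 'm'


# 41 = red background (40 + 1), 37 = white foreground (30 + 7), 1 = intensity on
seq = sgr(41, 37, 1)
print(repr(seq))                         # '\x1b[41;37;1m'
print(seq + 'CRITICAL message' + RESET)  # shown in colour on an ANSI terminal
```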

So, we use a simple color_map dictionary that allows mapping colour names to integer colour values. We also use a level_map dictionary which maps logging levels to a tuple encoding the background colour, foreground colour (colour names) and intensity (a Boolean). If no background colour is specified (None), the default is used. Because Windows terminals usually have a dark background and Linux terminals a light background, the level_map is defined slightly differently on Windows. We also define the escape sequence beginning (called the CSI for Control Sequence Introducer/Initiator), and the reset sequence.

        # color names to indices
        color_map = {
            'black': 0,
            'red': 1,
            'green': 2,
            'yellow': 3,
            'blue': 4,
            'magenta': 5,
            'cyan': 6,
            'white': 7,
        }

        # levels to (background, foreground, bold/intense)
        if os.name == 'nt':
            level_map = {
                logging.DEBUG: (None, 'blue', True),
                logging.INFO: (None, 'white', False),
                logging.WARNING: (None, 'yellow', True),
                logging.ERROR: (None, 'red', True),
                logging.CRITICAL: ('red', 'white', True),
            }
        else:
            level_map = {
                logging.DEBUG: (None, 'blue', False),
                logging.INFO: (None, 'black', False),
                logging.WARNING: (None, 'yellow', False),
                logging.ERROR: (None, 'red', False),
                logging.CRITICAL: ('red', 'white', True),
            }
        csi = '\x1b['
        reset = '\x1b[0m'

        def colorize(self, message, record):
            if record.levelno in self.level_map:
                bg, fg, bold = self.level_map[record.levelno]
                params = []
                if bg in self.color_map:
                    params.append(str(self.color_map[bg] + 40))
                if fg in self.color_map:
                    params.append(str(self.color_map[fg] + 30))
                if bold:
                    params.append('1')
                if params:
                    message = ''.join((self.csi, ';'.join(params),
                                       'm', message, self.reset))
            return message

Once the mappings and constants have been defined, the colorize method is very simple. If the record’s level is in the level map, we get the tuple for background and foreground colours and intensity, convert it to the appropriate parameters and construct the escape sequence. We return the passed-in message, bracketed by the initial escape sequence which encodes the appropriate colours and intensity for the record’s level, and the final escape sequence which resets the terminal to default colours and intensity.

The implementation thus far adds ANSI escape sequences to messages to colourise them. These are natively supported on Unix, Linux and OS X, but not so on Windows. We therefore define the output_colorized method, which is called from emit, differently on NT and non-NT platforms. The non-NT implementation is very simple: assuming ANSI support is native, we just write the colourised message to the output stream. For Windows, we need to interpret the ANSI escape sequences and implement them with Win32 API calls. Here are the implementations:

        if os.name != 'nt':
            def output_colorized(self, message):
                self.stream.write(message)
        else:
            import re
            ansi_esc = re.compile(r'\x1b\[((\d+)(;(\d+))*)m')

            nt_color_map = {
                0: 0x00,    # black
                1: 0x04,    # red
                2: 0x02,    # green
                3: 0x06,    # yellow
                4: 0x01,    # blue
                5: 0x05,    # magenta
                6: 0x03,    # cyan
                7: 0x07,    # white
            }

            def output_colorized(self, message):
                parts = self.ansi_esc.split(message)
                write = self.stream.write
                h = None
                fd = getattr(self.stream, 'fileno', None)
                if fd is not None:
                    fd = fd()
                    if fd in (1, 2): # stdout or stderr
                        h = ctypes.windll.kernel32.GetStdHandle(-10 - fd)
                while parts:
                    text = parts.pop(0)
                    if text:
                        write(text)
                    if len(parts) > 4:
                        params = parts[0]
                        parts = parts[4:]
                        if h is not None:
                            params = [int(p) for p in params.split(';')]
                            color = 0
                            for p in params:
                                if 40 <= p <= 47:
                                    color |= self.nt_color_map[p - 40] << 4
                                elif 30 <= p <= 37:
                                    color |= self.nt_color_map[p - 30]
                                elif p == 1:
                                    color |= 0x08 # foreground intensity on
                                elif p == 0: # reset to default color
                                    color = 0x07
                                else:
                                    pass # error condition ignored
                            ctypes.windll.kernel32.SetConsoleTextAttribute(h, color)

Because the integer values for ANSI colour codes differ slightly from those for Windows, we use an nt_color_map dictionary to map between the two. We use regular expressions to split the colourised message into escape sequences and literal text to be output. (Note that because there are four groups in the regular expression, the list will contain four entries for each ANSI sequence found.)
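To make the shape of the split list concrete, here is a small sketch that runs the same regular expression over a colourised string and then walks the result in the same way as the Windows code. The function name split_ansi is hypothetical; it only collects (text, params) pairs instead of writing anything to a console.

```python
import re

ansi_esc = re.compile(r'\x1b\[((\d+)(;(\d+))*)m')

message = '\x1b[31;1mhello\x1b[0m'
parts = ansi_esc.split(message)
print(parts)
# ['', '31;1', '31', ';1', '1', 'hello', '0', '0', None, None, '']


def split_ansi(message):
    """Return (text, params) pairs; params is None after the final text."""
    parts = ansi_esc.split(message)
    result = []
    while parts:
        text = parts.pop(0)
        params = None
        if len(parts) > 4:
            params = parts[0]   # first group: the full parameter string
            parts = parts[4:]   # skip all four group entries
        result.append((text, params))
    return result


print(split_ansi(message))
# [('', '31;1'), ('hello', '0'), ('', None)]
```

Groups which did not take part in a match (as with the single-parameter reset sequence) show up as None, which is why only the first of the four entries is used.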

In order to colourise the text in the terminal, we need to obtain a Windows console handle for the output stream, using the GetStdHandle Win32 API. If this handle is not obtainable, literal text is output but the ANSI escape sequences are ignored. If the handle is obtained, then the ANSI sequences are converted to the corresponding Win32 attribute values and the SetConsoleTextAttribute API is used to write them to the console.
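The conversion from ANSI parameters to a Win32 attribute value is pure arithmetic, so it can be sketched and checked on any platform. The function name ansi_to_win_attr is made up for this example, and the actual SetConsoleTextAttribute call is deliberately left out so that nothing here depends on Windows.

```python
# ANSI colour index -> Win32 colour bits (blue = 0x01, green = 0x02, red = 0x04)
NT_COLOR_MAP = {
    0: 0x00,  # black
    1: 0x04,  # red
    2: 0x02,  # green
    3: 0x06,  # yellow
    4: 0x01,  # blue
    5: 0x05,  # magenta
    6: 0x03,  # cyan
    7: 0x07,  # white
}


def ansi_to_win_attr(params):
    """Convert a list of ANSI integer parameters to a console attribute."""
    color = 0
    for p in params:
        if 40 <= p <= 47:
            color |= NT_COLOR_MAP[p - 40] << 4   # background: high nibble
        elif 30 <= p <= 37:
            color |= NT_COLOR_MAP[p - 30]        # foreground: low nibble
        elif p == 1:
            color |= 0x08                        # foreground intensity on
        elif p == 0:
            color = 0x07                         # reset: white on black
    return color


print(hex(ansi_to_win_attr([41, 37, 1])))   # 0x4f
print(hex(ansi_to_win_attr([0])))           # 0x7
```

So the CRITICAL setting of intense white on red becomes 0x4f, which is the value that would be handed to SetConsoleTextAttribute.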

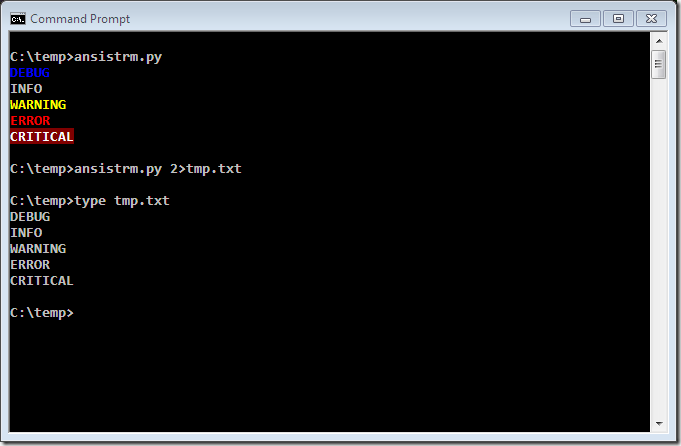

The whole thing is available in a ready-to-run form in this gist, which should run on all Pythons >= 2.5 (and Python 2.4, too, if you have ctypes installed). Here are some screenshots of running the scripts on Ubuntu, Windows 7, Windows 2008, and Mac OS X, showing the expected results for both terminal output and output piped to a file.

Ubuntu Jaunty:

Windows 7:

Windows 2008 (Python 2.4):

Mac OS X:

If you want different colour settings, you can probably achieve this just by changing the level_map contents. If you want to insert colourisation for just part of your messages, you could change the logic in the colorize and/or format methods. Of course, you can also override output_colorized and colorize if you need to support additional ANSI escape sequences.
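As a rough sketch of the first suggestion, the example below changes the colour for INFO messages by subclassing and replacing level_map. To keep it self-contained it uses a cut-down, ANSI-only stand-in rather than the full handler: MiniColorHandler and GreenInfoHandler are hypothetical names, the stand-in colourises unconditionally (no terminal check, no Windows support), and it is written for Python 3.

```python
import io
import logging


class MiniColorHandler(logging.StreamHandler):
    """Hypothetical ANSI-only stand-in; it skips the terminal check."""
    color_map = {'black': 0, 'red': 1, 'green': 2, 'yellow': 3,
                 'blue': 4, 'magenta': 5, 'cyan': 6, 'white': 7}
    level_map = {
        logging.WARNING: (None, 'yellow', False),
        logging.ERROR: (None, 'red', False),
    }
    csi = '\x1b['
    reset = '\x1b[0m'

    def colorize(self, message, record):
        bg, fg, bold = self.level_map.get(record.levelno, (None, None, False))
        params = []
        if bg in self.color_map:
            params.append(str(self.color_map[bg] + 40))
        if fg in self.color_map:
            params.append(str(self.color_map[fg] + 30))
        if bold:
            params.append('1')
        if params:
            message = self.csi + ';'.join(params) + 'm' + message + self.reset
        return message

    def format(self, record):
        return self.colorize(super().format(record), record)


class GreenInfoHandler(MiniColorHandler):
    # Copy the parent's map so that the parent class is left unchanged.
    level_map = dict(MiniColorHandler.level_map)
    level_map[logging.INFO] = (None, 'green', True)


stream = io.StringIO()
logger = logging.getLogger('colour.demo')
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(GreenInfoHandler(stream))
logger.info('hello')
print(repr(stream.getvalue()))   # '\x1b[32;1mhello\x1b[0m\n'
```

The same approach should carry over to the full handler, since colorize looks level_map up through self.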

Your comments are welcome, particularly any suggestions for improvements. Thanks for reading.